Anyone who has put up a web form has suffered from bad form data. We all know what it looks like: "aaaaa", "test@test.com", "1@1.com", "asdf", "mickey mouse", "donald duck", "555-1212". The list goes on.

Sure, one option is to provide access to an asset only after sending the person a link, which ensures that the email address is valid, but there are many situations where you don't want to (or can't) add this secondary step. Generally, these are real people submitting the form, so the various tools for blocking bots and spammers are of no use either.

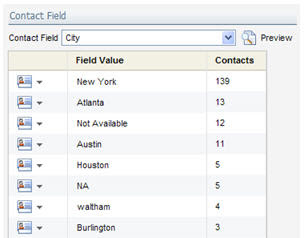

However, having this data in your marketing database does no good either. With it there, your analytics will show incorrect results, segments might pull in bad data, and any of this data that gets passed to sales will immediately decrease marketing's credibility.

Now, to solve this problem, we have a Cloud Connector that does a "junk scan" on your data to look for the typical problems that are seen. It scans first name, full name, email address, and phone number looking for data that is known to be bad or looks suspect, and flags the record in your Eloqua marketing database.

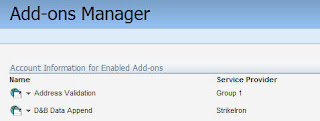

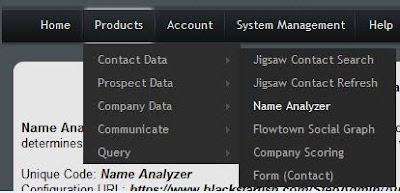

To get started, you'll need the Cloud Connector installed in your Eloqua instance. This is very easy to do; see the recent post on Cloud Connector Installation instructions for how to add a new Cloud Connector to your instance. The Cloud Connector we'll be looking at here is available on Black Starfish, our repository of interesting connectors. Go to cloudconnectors.eloqua.com and create an account. Under Contact Data, you'll find Name Analyzer - that's the connector to install.

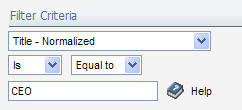

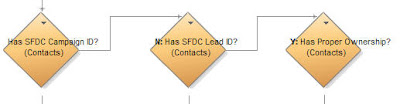

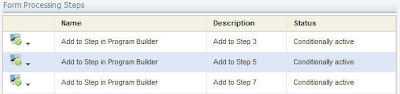

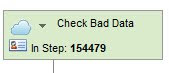

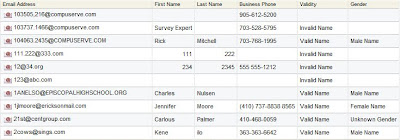

With the Name Analyzer cloud connector installed, all you need to do is create a step in your marketing automation program. The step can sit right after a web form is submitted, inside your contact washing machine, or in a program you run when you analyze your contact data and detect data quality issues. The step takes in contacts, and the cloud connector flags each one as valid, invalid, or unknown in a specific contact field. As a bonus, it also flags gender, which is useful for geographies like Germany where gender is important in building a salutation.

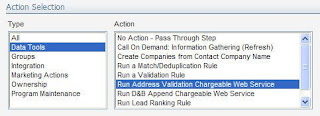

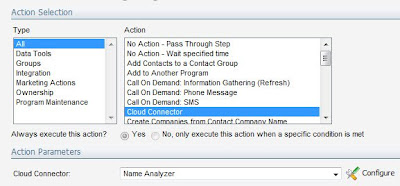

For the step, select "Cloud Connector" as your step type, and you will see a drop-down list of options below. You'll see the "Name Analyzer" cloud connector you just installed via the setup interface in this list. Choose that, and click the "Configure" button to begin setting up the step.

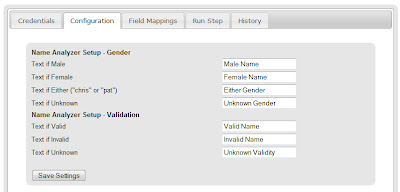

The popup window gives you your configuration options, the majority of which control how you want to flag the contact. You can choose the text used to mark each contact for a) gender, and b) validity. For gender, remember that there is an option for first names that could be either gender (e.g., "Chris" or "Pat").
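To make the idea of configurable flag text concrete, here is a minimal sketch of how a first name could be mapped to whatever gender label you chose. The name table, the category keys, and the `gender_label` function are all hypothetical illustrations, not the connector's actual data or API.

```python
# Hypothetical lookup table; the connector's real name database is not shown here.
NAME_GENDERS = {
    "maria": "female",
    "john": "male",
    "chris": "either",  # names that could be either gender
    "pat": "either",
}


def gender_label(first_name, labels):
    """Return the configured label text for a first name.

    `labels` maps the assumed categories ("male", "female", "either",
    "unknown") to the text you want written to the contact field.
    """
    category = NAME_GENDERS.get(first_name.strip().lower(), "unknown")
    return labels[category]


# Example label text, standing in for what you might type into the configuration.
my_labels = {"male": "M", "female": "F", "either": "Either", "unknown": ""}
print(gender_label("Chris", my_labels))
```

Keeping the label text separate from the lookup means the same logic works whether you store "M"/"F", full words, or values that match an existing picklist.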

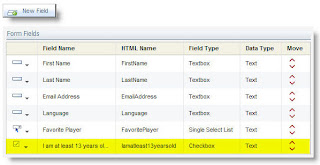

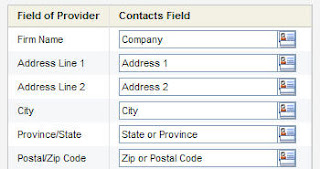

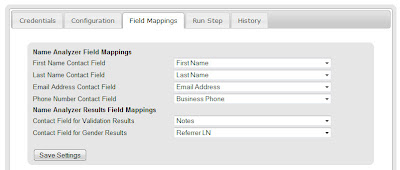

Click save on that screen and move to the field mapping tab. As inputs, it will take first name, last name, email address, and phone number, and as outputs, it will write the text you just selected to fields for gender and validity.

You're now ready to go. The validity analyzer looks at the following to figure out whether a person's contact information is valid:

- First Name: to understand if the name is known to exist (by cross-referencing against a database of known names)

- Full Name: looking for known bad names ("mickey mouse" or "donald duck"), where the first name may be valid by itself ("mickey" or "donald")

- Email Domain: looking for @test.com or @1.com

- Email Name: looking for aaa@ or 111@ as invalid email names

- Phone Number: looking for numbers that are too short, all the same number (11111, 22222), or known to be bad (555-1212)
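The checks above can be approximated in a few lines of code. What follows is a rough sketch under stated assumptions, not the connector's implementation: the reference sets, the minimum phone length, the rule that an unrecognized first name yields "unknown", and the `junk_scan` function itself are all made up for illustration.

```python
import re

# Hypothetical sample data; the connector's real reference lists are not published here.
KNOWN_FIRST_NAMES = {"mickey", "donald", "chris", "pat", "maria", "john"}
BAD_FULL_NAMES = {("mickey", "mouse"), ("donald", "duck")}
BAD_EMAIL_DOMAINS = {"test.com", "1.com"}
BAD_PHONE_NUMBERS = {"5551212"}
MIN_PHONE_DIGITS = 7  # assumed threshold for "too short"


def is_repeated_char(text):
    """True when text is a single character repeated, e.g. 'aaa' or '11111'."""
    return len(text) >= 3 and len(set(text)) == 1


def junk_scan(first_name, last_name, email, phone):
    """Classify a contact as 'valid', 'invalid', or 'unknown'."""
    first = first_name.strip().lower()
    last = last_name.strip().lower()
    local, _, domain = email.strip().lower().partition("@")
    digits = re.sub(r"\D", "", phone)  # keep only the digits

    # Full name: known bad combinations, even if the first name alone is real.
    if (first, last) in BAD_FULL_NAMES:
        return "invalid"
    # Email domain and email name checks.
    if domain in BAD_EMAIL_DOMAINS or is_repeated_char(local):
        return "invalid"
    # Phone: too short, all the same digit, or a known bad number.
    if (len(digits) < MIN_PHONE_DIGITS
            or is_repeated_char(digits)
            or digits in BAD_PHONE_NUMBERS):
        return "invalid"
    # First name: not in the reference list, so we cannot vouch for it (assumed rule).
    if first not in KNOWN_FIRST_NAMES:
        return "unknown"
    return "valid"
```

Note the order of the checks: the full-name test runs before the first-name test so that "mickey mouse" is rejected even though "mickey" on its own is a legitimate name.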

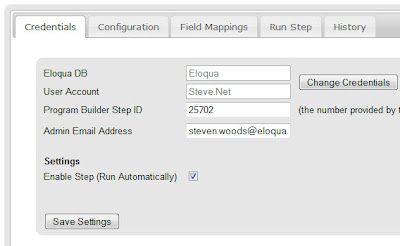

On the "Run Step" tab, you can run the step manually to pull in a few members from the step and see what the results are. Alternatively, go to the "Credentials" tab and check off "Enable Step" to have the step run automatically.

And that's it, you're done. Now, the contacts that flow through the step will be marked with validity and gender.

Looking forward to your feedback on this. Is this catching and flagging the right garbage names that are input? What other factors do you look for when you're looking at your names manually?